Transfer Learning with TensorFlow : Fine Tuning

Notebook demonstrated Transfer learning using Fine tuning methods in TensorFlow

- Transfer Learning in TensorFlow : Fine Tuning

- Create helper functions

- Get the Data

- Modelling experiments we are going to run:

- Keras Functional API:

- Model 0: Building a transfer learning model using the Keras Functional API

- Getting a Feature vector from a trained model

- Running transfer learning experiments

- Getting and preprocessing data for model_1

- Adding data augmentation into the model

- Visualize our data augmentation layer

- Model 1 : Feature extraction Transfer learning on 1% of data with data augmentation

- Model 2: Feature extraction transfer learning model with 10% of data and data augmentation

- Model 3: Fine-tuning an existing model on 10% of the data

- Model 4: Fine-tuning and existing model on the full dataset

- Viewing our experiment data on TensorBoard

- References:

Transfer Learning in TensorFlow : Fine Tuning

This Notebook is an account of my working for the Udemy course :TensorFlow Developer Certificate in 2022: Zero to Mastery.

Concepts covered in this Notebook:

- Introduce fine-tuning transfer learning with TensorFlow.

- Introduce the Keras Functional API to build models

- Using a small dataset to experiment faster(e.g. 10% of training samples)

- Data augmentation (making your training set more diverse without adding samples)

- Running a series of experiments on our Food Vision data

- Introduce the ModelCheckpoint callback to save intermediate training results.

Create helper functions

In previous notebooks, we have created some helper functions for reusing them while evaluating and visualizing the results of our models.

So, it's a good idea to put functions you'll want to use again in a script you can download and import into your notebooks.

Below we download a file that contains all the functions we have created to help us during the model training in previous notebooks.

!wget https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/helper_functions.py

from helper_functions import create_tensorboard_callback, plot_loss_curves, unzip_data, walk_through_dir

Note: If you are running this notebook in google colab, when it times out colab will delete

helper_functions.py, so you'll have to redownload it if you want access to your helper functions.

!wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_10_percent.zip

unzip_data("10_food_classes_10_percent.zip")

walk_through_dir("10_food_classes_10_percent")

train_dir = "10_food_classes_10_percent/train"

test_dir = "10_food_classes_10_percent/test"

import tensorflow as tf

IMG_SIZE = (224,224)

BATCH_SIZE = 32

train_data_10_percent = tf.keras.preprocessing.image_dataset_from_directory(directory= train_dir,

batch_size= BATCH_SIZE,

image_size = IMG_SIZE,

label_mode = "categorical")

test_data = tf.keras.preprocessing.image_dataset_from_directory(directory= test_dir,

batch_size = BATCH_SIZE,

image_size = IMG_SIZE,

label_mode = "categorical")

train_data_10_percent

train_data_10_percent.class_names

# see an example of a batch data

for images, labels in train_data_10_percent.take(1):

print(images, labels)

Modelling experiments we are going to run:

| Experiment | Data | Preprocessing | Model |

|---|---|---|---|

| Model 0(baseline) | 10 classes of Food101 data(random 10% training data only) | None | Feature Extractor: EfficientNetB0 (pre-trained on ImageNet, all layers frozen) with no top |

| Model 1 | 10 classes of Food101 data(random 1% training dta only) | Random Flip, Rotation, Zoom, Height, Width datat augmentation | Same as Model 0 |

| Model 2 | Same as Model 0 | Same as Model 1 | Same as Model 0 |

| Model 3 | Same as Model 0 | Same as Model 1 | Fine tuning: Model 2 (EfficientNetB0 pre-trained on ImageNet) with top layer trained on custom data, top 10 layers unfrozen |

| Model 4 | 10 classes of Food101 data(100% training data | Same as Model 1 | Same as Model 3 |

Keras Functional API:

# Creating a model with the Functional API

inputs = tf.keras.layers.Input(shape = (28,28))

x = tf.keras.layers.Flatten()(inputs)

x = tf.keras.layers.Dense(64, activation = "relu")(x)

x = tf.keras.layers.Dense(64, activation = "relu")(x)

outputs = tf.keras.layers.Dense(10, activation = "softmax")(x)

functional_model = tf.keras.Model(inputs,outputs,name = "functional model")

functional_model.compile(

loss = tf.keras.losses.SparseCategoricalCrossentropy(),

optimizer = tf.keras.optimizers.Adam(),

metrics =["accuracy"]

)

functional_model.fit(X_train, y_train,

batch_size = 32,

epochs =5)base_model = tf.keras.applications.EfficientNetB0(include_top = False)

# 2. Freeze the base model(so the underlying pre-trained patterns aren't updated)

base_model.trainable = False

# 3. Create inputs into our model

inputs = tf.keras.layers.Input(shape=(224,224,3), name= "input_layer")

# 4. If using a model likeResNet50V2 you will need to normalize the inputs(you don't have to for EfficientNet(s))

# x = tf.keras.layers.experimental.preprocessing.Rescaling(1./255)(inputs)

# 5. Pass the inputs to the base_model

x = base_model(inputs)

print(f"Shape after passing inputs through base model: {x.shape}")

# 6. Average pool the output of the base model(aggregate all the most important info., reduce no. of compuatations)

x = tf.keras.layers.GlobalAveragePooling2D(name = "global_average_pooling_layer")(x)

print(f"Shape after GlobalAveragePooling2D: {x.shape}")

# 7. Create the output activation layer

outputs = tf.keras.layers.Dense(10,activation = "softmax", name = "output_layer")(x)

# 8. Combine the inputs with the ouputs into a model

model_0 = tf.keras.Model(inputs,outputs)

# 9. Compile the model

model_0.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(),

metrics = ["accuracy"])

# 10. Fit the model

history_10_percent = model_0.fit(train_data_10_percent,

epochs = 5,

steps_per_epoch = len(train_data_10_percent),

validation_data = test_data,

validation_steps = int(0.25*len(test_data)),

callbacks = [create_tensorboard_callback(dir_name = "transfer_learning",

experiment_name ="10_percent_feature_extraction")])

model_0.evaluate(test_data)

for layer_number, layer in enumerate(base_model.layers):

print(layer_number, layer.name)

236 layers in EfficientNetB0. The EfficientNetB0 architecture already has the first layers with normalization so we don't need to do the rescaling.

# Let's check the summary of the base model i.e. EfficientNetB0 Model

base_model.summary()

model_0.summary()

import matplotlib.pyplot as plt

plt.style.use('dark_background')

plot_loss_curves(history_10_percent)

Getting a Feature vector from a trained model

Let's demonstrate the Global Average Pooling 2D layer...

We have a tensor after our model goes through base_model of shape (None, 7, 7, 1280).

But then when it passes through GlobalAveragePooling2D, it turns into (None, 1280).

Let's use a similar shaped tensor of (1,4,4,3) and then pass it to GlobalAveragePooling2D.

input_shape = (1,4,4,3)

# Create a random tensor

tf.random.set_seed(42)

input_tensor = tf.random.normal(input_shape)

print(f"Random input tensor: \n {input_tensor} \n")

# Pass the random tensor to the GlobalAveragePooling 2D layer

global_average_pooled_tensor = tf.keras.layers.GlobalAveragePooling2D()(input_tensor)

print(f"2D global average pooled random tensor: \n {global_average_pooled_tensor}\n")

# Check the shape of the different tensors

print(f"Shape of input tensor: {input_tensor.shape}\n")

print(f"Shape of Global Average Pooled 2D tensor : {global_average_pooled_tensor.shape}\n")

tf.reduce_mean(input_tensor, axis = [1,2])

# Define the input shape

input_shape = (1,4,4,3)

# Create a random tensor

tf.random.set_seed(42)

input_tensor = tf.random.normal(input_shape)

print(f"Random input tensor: \n {input_tensor} \n")

# Pass the random tensor to the GlobalAveragePooling 2D layer

global_max_pooled_tensor = tf.keras.layers.GlobalMaxPooling2D()(input_tensor)

print(f"2D global max pooled random tensor: \n {global_max_pooled_tensor}\n")

# Check the shape of the different tensors

print(f"Shape of input tensor: {input_tensor.shape}\n")

print(f"Shape of Global Max Pooled 2D tensor : {global_max_pooled_tensor.shape}\n")

Note: One of the reasons feature extraction transfer learning is named how it is becuase what often happens is pre-trained model outputs a feature vector, a long tensor of number which represents the learned representation of the model on a particular sample, in our case, this is the output of the tf.keras.layers.GlobalAveragePooling2D() layer) which can then be used to extract patterns out of our own specific problem.

Feature Vector:

- A feature vector is a learned representation of the input data (a compressed form of the input data based on how the model sees it)

Running transfer learning experiments

We have seen the incredible results transfer learning can get with only 10% of training data, but how does it go with 1% of training data. We will set up a couple of experiments to find out.

-

model_1- use feature extraction transfer learning with 1% of data with data augmentation. -

model_2- use feature extraction transfer learning with 10% of the training data with data augmentation. -

model_3- use fine-tuning transfer learning on 10% of the training data with data augmentation. -

model_4- use fine-tuning transfer learning on 100% of the training data with data augmentation.

Note: throughout all experiments the same test dataset will be used to evaluate our model. This ensures consistency across evaluation metrics.

!wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_1_percent.zip

unzip_data("10_food_classes_1_percent.zip")

train_dir_1_percent = "10_food_classes_1_percent/train"

test_dir = "10_food_classes_1_percent/test"

walk_through_dir("10_food_classes_1_percent")

IMG_SIZE = (224,224)

BATCH_SIZE = 32

train_data_1_percent = tf.keras.preprocessing.image_dataset_from_directory(train_dir_1_percent,

label_mode = "categorical",

image_size = IMG_SIZE,

batch_size = BATCH_SIZE) # default is 32

test_data = tf.keras.preprocessing.image_dataset_from_directory(test_dir,

label_mode = "categorical",

image_size = IMG_SIZE,

batch_size=BATCH_SIZE)

Let's look at the Food Vision dataset we are using:

| Dataset Name | Source | Classes | Traning data | Testing data |

|---|---|---|---|---|

| pizza_steak | Food101 | pizza,steak(2) | 750 images of pizza and steak(same as original Food101 dataset) | 250 images of pizza and steak (same as original Food101 dataset |

| 10_food_classes_1_percent | Same as above | Chicken curry, Chicken wings, fried rice, grilled salmon, hamburger, ice cream, pizza, ramen, steak, sushi(10) | 7 randomly selected images of each (1% of original training data) | 250 images of each class(same as original Food101 dataset |

| 10_food_classes_10_percent | Same as above | Same as above | 75 randomly selected images of each class(10% of original training data) | Same as above |

| 10_food_classes_100_percent | Same as above | Same as above | 750 images of each class (100% of original training data) | Same as above |

| 101_food_classes_10_percenet | Same as above | All classes from Food101(101) | 75 images of each class (10% of original Food101 dataset) | 250 images of each class (same as original Food101 dataset |

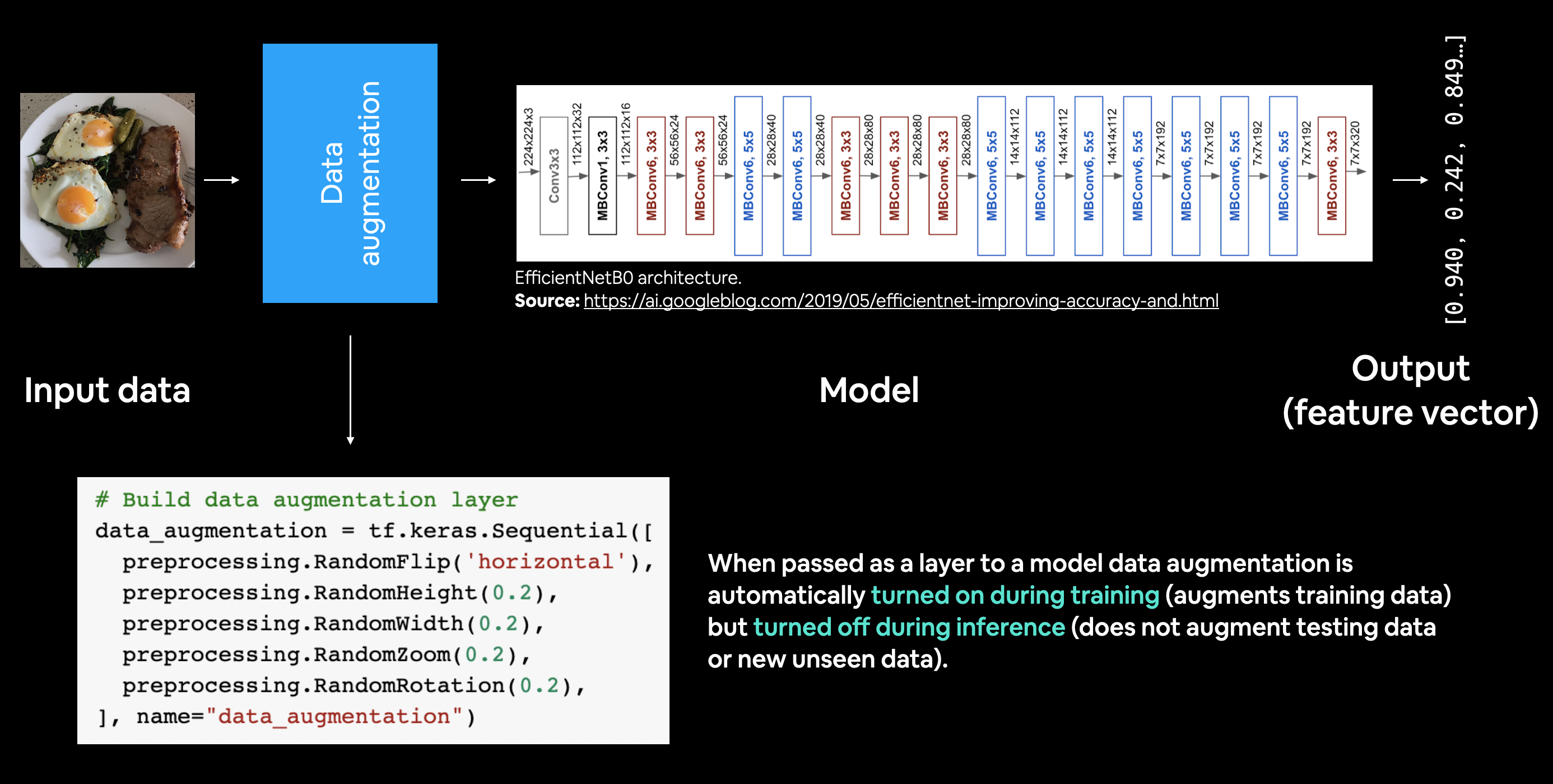

Adding data augmentation into the model

To add data augmentation right into our models, we can use the layers inside:

-

tf.keras.layers.experimental.preprocessing()

We can see the benefits of doing this withing the TensorFlow data augmentation documentation: https://www.tensorflow.org/tutorials/images/data_augmentation

Main Benefits:

- Preprocessing of image (augmenting them) happens on the GPU (much faster) rather than CPU.

- Image data augmentation only happens during training, so we can still export our whole model and use it elsewhere.

Example of using data augmentation as the first layer within a model (EfficientNetB0).

Example of using data augmentation as the first layer within a model (EfficientNetB0).

The data augmentation transformations we're going to use are:

- RandomFlip - flips image on horizontal or vertical axis.

- RandomRotation - randomly rotates image by a specified amount.

- RandomZoom - randomly zooms into an image by specified amount.

- RandomHeight - randomly shifts image height by a specified amount.

- RandomWidth - randomly shifts image width by a specified amount.

-

Rescaling - normalizes the image pixel values to be between 0 and 1, this is worth mentioning because it is required for some image models but since we're using the

tf.keras.applicationsimplementation ofEfficientNetB0, it's not required.

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.layers.experimental import preprocessing

# Create data augmentation stage with horizontal flipping, rotation, zoom, rotations etc.

data_augmentation = keras.Sequential([

preprocessing.RandomFlip("horizontal"),

preprocessing.RandomRotation(0.2),

preprocessing.RandomZoom(0.2),

preprocessing.RandomHeight(0.2),

preprocessing.RandomWidth(0.2),

# preprocessing.Rescale(1./255) # Keep for model like ResNet50V2 but Efficient has in-built rescaling

], name = "data_augmentation")

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import os

import random

target_class = random.choice(train_data_1_percent.class_names) # choose a random class

target_dir = "10_food_classes_1_percent/train/" + target_class # create the target directory

random_image = random.choice(os.listdir(target_dir)) # choose a random image from target directory

random_image_path = target_dir + "/" + random_image # create the choosen random image path

img = mpimg.imread(random_image_path) # read in the chosen target image

plt.imshow(img) # plot the target image

plt.title(f"Original random image from class: {target_class}")

plt.axis(False); # turn off the axes

# Augment the image

augmented_img = data_augmentation(tf.expand_dims(img, axis=0)) # data augmentation model requires shape (None, height, width, 3)

plt.figure()

plt.imshow(tf.squeeze(augmented_img)/255.) # requires normalization after augmentation

plt.title(f"Augmented random image from class: {target_class}")

plt.axis(False);

input_shape = (224,224,3)

base_model = tf.keras.applications.EfficientNetB0(include_top = False)

base_model.trainable = False

# Create input layers

inputs = layers.Input(shape = input_shape)

# Add in data augmentation Sequential model as a layer

x = data_augmentation(inputs)

# Give base model the inputs (after augmentation) and don't train it

x = base_model(x, training = False)

# Pool output features of the base model

x = layers.GlobalAveragePooling2D()(x)

# Put a dense layer on as the output

outputs = layers.Dense(10, activation = "softmax", name= "output_layer")(x)

# Make a model using the inputs and outputs

model_1 = keras.Model(inputs,outputs)

# Compile the Model

model_1.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(),

metrics = ["accuracy"])

# Fit the Model

history_1_percent = model_1.fit(train_data_1_percent,

epochs = 5,

steps_per_epoch = len(train_data_1_percent),

validation_data = test_data,

validation_steps = int(0.25*len(test_data)),

callbacks = [create_tensorboard_callback(dir_name="transfer_learning",

experiment_name = "1_percent_data_aug")])

model_1.summary()

results_1_percent_data_aug = model_1.evaluate(test_data)

results_1_percent_data_aug

plot_loss_curves(history_1_percent)

#!wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_10_percent.zip

##unzip_data(10_food_classes_10_percent)

train_dir_10_percent = "10_food_classes_10_percent/train"

test_dir = "10_food_classes_10_percent/test"

IMG_SIZE

walk_through_dir("10_food_classes_10_percent")

import tensorflow as tf

IMG_SIZE = (224,224)

train_data_10_percent = tf.keras.preprocessing.image_dataset_from_directory(train_dir_10_percent,

label_mode = "categorical",

image_size = (224,224))

test_data = tf.keras.preprocessing.image_dataset_from_directory(test_dir,

label_mode = "categorical",

image_size = IMG_SIZE)

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras.layers.experimental import preprocessing

from tensorflow.keras.models import Sequential

# Build data augmentation layer

data_augmentation = Sequential([

preprocessing.RandomFlip('horizontal'),

preprocessing.RandomHeight(0.2),

preprocessing.RandomWidth(0.2),

preprocessing.RandomZoom(0.2),

preprocessing.RandomRotation(0.2),

# preprocessing.Rescaling(1./255) # keep for ResNet50V2, remove for EfficientNet

], name="data_augmentation")

# Setup the input shape to our model

input_shape = (224, 224, 3)

# Create a frozen base model

base_model = tf.keras.applications.EfficientNetB0(include_top=False)

base_model.trainable = False

# Create input and output layers

inputs = layers.Input(shape=input_shape, name="input_layer") # create input layer

x = data_augmentation(inputs) # augment our training images

x = base_model(x, training=False) # pass augmented images to base model but keep it in inference mode, so batchnorm layers don't get updated: https://keras.io/guides/transfer_learning/#build-a-model

x = layers.GlobalAveragePooling2D(name="global_average_pooling_layer")(x)

outputs = layers.Dense(10, activation="softmax", name="output_layer")(x)

model_2 = tf.keras.Model(inputs, outputs)

# Compile

model_2.compile(loss="categorical_crossentropy",

optimizer=tf.keras.optimizers.Adam(lr=0.001), # use Adam optimizer with base learning rate

metrics=["accuracy"])

model_2.summary()

Creating a ModelCheckpoint Callback

- Callbacks are a took which can add helpful functionality to your models during training, evaluation or inference.

- Some popular callbacks include:

| Callback name | Use Case | Code |

|---|---|---|

| TensorBoard | Log the performance of multiple models and then view and compare these models in a visual way on Tensor Board. Helpfule to compare teh results of different models on your data | tf.keras.callbacks.TensorBoard() |

| Model Checkpointing | Save your model as it trains so you can stop training if needed and come back to continue off where you left. Helpful if training takes a long time and can't be done in one sitting | tf.keras.callbacks.ModelCheckpoint() |

| Early Stopping | Leave your model training for arbitray amount of time and have it stop training automatically when it ceases to improve. Helpful when you've got a large dataset and don't know long training will take | tf.keras.callbacks.EarlyStopping() |

checkpoint_path = "ten_percent_model_checkpoints_weights/checkpoint.ckpt"

# Create a modelcheckpoint callback that saves the model's weights only

checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(filepath = checkpoint_path,

weights_only = True,

save_best_only = False,

save_freq= "epoch", #save every epoch

verbose = 1)

initial_epochs = 5

history_10_percent_data_aug = model_2.fit(train_data_10_percent,

epochs = initial_epochs,

validation_data= test_data,

validation_steps = int(0.25* len(test_data)),

callbacks = [create_tensorboard_callback(dir_name = "transfer_learning",

experiment_name = "10_percent_data_aug"),

checkpoint_callback])

model_0.evaluate(test_data)

results_10_percent_data_aug = model_2.evaluate(test_data)

results_10_percent_data_aug

plot_loss_curves(history_10_percent_data_aug)

model_2.load_weights(checkpoint_path)

loaded_weights_model_results = model_2.evaluate(test_data)

results_10_percent_data_aug == loaded_weights_model_results

model_2.layers

for layer in model_2.layers:

print(layer, layer.trainable)

# Checkout the trainable layers in our model (EfficientNetB0)

for i, layer in enumerate(model_2.layers[2].layers):

print(i, layer.name , layer.trainable)

print(len(model_2.layers[2].trainable_variables))

Currently there are zero trainable variables in our base model

base_model.trainable = True

# Freeze all layers except for the last 10

for layer in base_model.layers[:-10]:

layer.trainable= False

# Recompile the model (we have to recompile our model every time we make change)

model_2.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(lr = 0.0001), # when fine-tuning you typically want to lower the lr by 10x

metrics = ["accuracy"])

Note: When using fine-tuning it's best practics to lower your learning rate. This is a hyperparamter you can tune. But a good rule of thumb is at least 10x (though different sources will claim other values)

Resource: Universal Language Model Fine-tuning for Text Classification paper by Jeremy Howard and Sebastian Ruder.

# Check which layers are tunable(trainable)

for layer_number, layer in enumerate(model_2.layers[2].layers):

print(layer_number, layer.name, layer.trainable)

print(len(model_2.trainable_variables))

fine_tune_epochs = initial_epochs +5

# Refit the mdoel(same as model_2 except with more trainable layers)

history_fine_10_percent_data_aug = model_2.fit(train_data_10_percent,

epochs = fine_tune_epochs,

validation_data = test_data,

validation_steps = int(0.25* len(test_data)),

initial_epoch = history_10_percent_data_aug.epoch[-1], #start training from prev last epoch

callbacks = [create_tensorboard_callback(dir_name = "transfer_learning",

experiment_name = "10_percent_fine_tune_last_10")])

results_fine_tune_10_percent = model_2.evaluate(test_data)

plot_loss_curves(history_fine_10_percent_data_aug)

The plot_loss_curves function works great with models which have only been fit once, however, we want something to compare one series of running fit() with another(e.g. before and after fine-tuning)

def compare_history(original_history, new_history, initial_epochs = 5):

"""

Compares two TensorFlow History objects

"""

# Get original history measurements

acc = original_history.history["accuracy"]

loss = original_history.history["loss"]

val_acc = original_history.history["val_accuracy"]

val_loss = original_history.history["val_loss"]

# Combine original history

total_acc = acc + new_history.history["accuracy"]

total_loss = loss + new_history.history["loss"]

total_val_acc = val_acc + new_history.history["val_accuracy"]

total_val_loss = val_loss + new_history.history["val_loss"]

# Make plot for Accuracy

plt.figure(figsize = (8,8))

plt.subplot(2,1,1)

plt.plot(total_acc, label = "Training Accuracy")

plt.plot(total_val_acc, label = "Val Accuracy")

plt.plot([initial_epochs-1, initial_epochs-1], plt.ylim(), label = "Start Fine tuning")

plt.legend(loc = "lower right")

plt.title("Trainable and Validation Accuracy")

# Make plot for Loss

plt.figure(figsize = (8,8))

plt.subplot(2,1,2)

plt.plot(total_loss, label = "Training Loss")

plt.plot(total_val_loss, label = "Val Loss")

plt.plot([initial_epochs-1, initial_epochs-1], plt.ylim(), label = "Start Fine tuning")

plt.legend(loc = "upper right")

plt.title("Trainable and Validation Accuracy")

compare_history(history_10_percent_data_aug,

history_fine_10_percent_data_aug,

initial_epochs = 5)

!wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_all_data.zip

unzip_data("10_food_classes_all_data.zip")

train_dir_all_data = "10_food_classes_all_data/train"

test_dir = "10_food_classes_all_data/test"

walk_through_dir("10_food_classes_all_data")

import tensorflow as tf

IMG_SIZE = (224,224)

train_data_10_classes_full = tf.keras.preprocessing.image_dataset_from_directory(train_dir_all_data,

label_mode = "categorical",

image_size = IMG_SIZE)

test_data = tf.keras.preprocessing.image_dataset_from_directory(test_dir,

label_mode = "categorical",

image_size = IMG_SIZE)

The test dataset we have loaded in is the same as what we've been using for previous experiments( all experiments have used the same test dataset).

Let's verify this...

model_2.evaluate(test_data)

results_fine_tune_10_percent

To train a fine-tuning model (model_4) we need to revert model_2 back to its feature extraction weights

# the same stage the 10 percent data model was fine-tuned from

model_2.load_weights(checkpoint_path)

model_2.evaluate(test_data)

results_10_percent_data_aug

What we have done till now:

-

Trained a feature extraction transfer learning model for 5 epochs on 10% of the data with data augmentation (model_2) and we saved the model's weights using

ModelCheckpointcallback. -

Fine-tuned the same model on the same 10% of the data for further 5 epochs with the top 10 layers of the base model unfrozen (model_3).

-

Saved the results and training logs each time

-

Reloaded the model from step 1 to do the same steps as step 2 except this time we are going to use all the data (model_4).

for layer_number, layer in enumerate(model_2.layers):

print(layer_number, layer.name, layer.trainable)

# Let's drill into our base_model (EfficientNetB0) check what layers are trainable

for layer_number, layer in enumerate(model_2.layers[2].layers):

print(layer_number, layer.name, layer.trainable)

model_2.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(lr = 0.0001),

metrics = ["accuracy"])

fine_tune_epochs = initial_epochs + 5

history_fine_10_classes_full = model_2.fit(train_data_10_classes_full,

epochs = fine_tune_epochs,

validation_data = test_data,

validation_steps = int(0.25*len(test_data)),

initial_epoch = history_10_percent_data_aug.epoch[-1],

callbacks = [create_tensorboard_callback(dir_name = "transfer_learning",

experiment_name = "full_10_classes_fine_tune_last_10")])

results_fine_tune_full_data = model_2.evaluate(test_data)

results_fine_tune_full_data

compare_history(original_history= history_10_percent_data_aug,

new_history = history_fine_10_classes_full,

initial_epochs = 5)

# Upload TensorBoard dev records

#!tensorboard dev upload --logdir ./transfer_learning \

# --name "Transfer Learning Experiments with 10 FOOD101 classes" \

# --description " A series of different transfer learning experiments with varying amount of data" \

# --one_shot # Exits the uploader once its finished uploading

#Run the above line by taking of the comments for the last four lines to upload the experiments to tensorboard

My TensorBoard Experiments are avaliable at : https://tensorboard.dev/experiment/0ZGWuA1vTv2NAdhoRmATDw/#scalars

# !tensorboard dev list

# TO delete a particular experiment

#!tensorboard dev delete --experiment_id {type the id here}