Transfer Learning with TensorFlow: Feature Extraction

This notebook demonstrates transfer learning using feature extraction in TensorFlow.

- Transfer Learning with TensorFlow: Feature Extraction

- Downloading and getting familiar with data

- Creating data loader (preparing the data)

- Setting up callbacks (things to run whilst our model trains)

- Creating models using TensorFlow Hub

- Creating and testing ResNet TensorFlow Hub Feature Extraction model

- Creating and testing EfficientNetB0 TensorFlow Hub Feature Extraction model

- Different types of transfer learning

- Comparing our model's results using TensorBoard

- References:

Transfer Learning with TensorFlow: Feature Extraction

This notebook is an account of my work for the Udemy course "TensorFlow Developer Certificate in 2022: Zero to Mastery".

Transfer learning is a machine learning method where a model developed for a task is reused as the starting point for a model on a second task.

It is a popular approach in deep learning where pre-trained models are used as the starting point for computer vision and natural language processing tasks, given the vast compute and time resources required to develop neural network models for these problems from scratch, and the huge jumps in skill that pre-trained models provide on related problems.

Concepts covered in this Notebook:

- Introducing transfer learning with TensorFlow

- Using a small dataset (10% of the training samples) to experiment faster

- Building a transfer learning feature extraction model with TensorFlow Hub

- Using TensorBoard to track modelling experiments and results

Why use transfer learning?

- Can leverage an existing neural network architecture proven to work on problems similar to our own

- Can leverage a working network architecture which has already learned patterns on similar data to our own (often results in great ML products with less data)

Examples of transfer learning use cases:

- Computer Vision (using models pre-trained on ImageNet for our own image classification problems)

- Natural Language Processing (detecting spam emails, i.e. a spam filter)

import zipfile

# Download the data

!wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_10_percent.zip

# Unzip the downloaded file

zip_ref = zipfile.ZipFile("10_food_classes_10_percent.zip")

zip_ref.extractall()

zip_ref.close()

import os

# Walk through 10 percent data dir and list number of files
for dirpath, dirnames, filenames in os.walk("10_food_classes_10_percent"):
    print(f"There are {len(dirnames)} directories and {len(filenames)} images in '{dirpath}'.")
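os.walk prints totals per directory. If you'd rather have per-class counts as a dictionary (handy for spotting class imbalance), a minimal sketch could look like the one below. count_images_per_class is a hypothetical helper, not part of the course code, and it assumes the split/<class_name>/<image files> layout used by this dataset.

```python
import os


def count_images_per_class(split_dir):
    """Return {class_name: number_of_files} for each subdirectory of split_dir.

    Assumes the layout split_dir/<class_name>/<image files> (hypothetical helper).
    """
    counts = {}
    for class_name in sorted(os.listdir(split_dir)):
        class_path = os.path.join(split_dir, class_name)
        if os.path.isdir(class_path):
            # Count only regular files (the images), not nested folders
            counts[class_name] = sum(
                1 for f in os.listdir(class_path)
                if os.path.isfile(os.path.join(class_path, f))
            )
    return counts

# Usage after unzipping the data:
# print(count_images_per_class("10_food_classes_10_percent/train"))
```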

from tensorflow.keras.preprocessing.image import ImageDataGenerator

IMAGE_SHAPE = (224,224)

BATCH_SIZE = 32

train_dir = "10_food_classes_10_percent/train"

test_dir = "10_food_classes_10_percent/test"

train_datagen = ImageDataGenerator(rescale=1/255.)

test_datagen = ImageDataGenerator(rescale = 1/255.)

print("Training images:")

train_data_10_percent = train_datagen.flow_from_directory(train_dir,
                                                          target_size=IMAGE_SHAPE,
                                                          batch_size=BATCH_SIZE,
                                                          class_mode="categorical")

print("Testing images:")

test_data = test_datagen.flow_from_directory(test_dir,
                                             target_size=IMAGE_SHAPE,
                                             batch_size=BATCH_SIZE,
                                             class_mode="categorical")
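Two arguments above do quiet work. rescale=1/255. multiplies every pixel value by 1/255 so intensities in 0-255 land in [0, 1], and class_mode="categorical" turns each class folder into a one-hot label. When classes aren't passed explicitly, flow_from_directory infers them from the subdirectory names and assigns label indices in alphanumeric order. The pure-Python sketch below mimics those steps on toy values; the helper names are hypothetical and nothing here calls Keras.

```python
# Pure-Python sketch; helper names are hypothetical and nothing here calls Keras.

def class_indices_from_dirs(class_dir_names):
    """Map class folder names to integer label indices in alphanumeric order."""
    return {name: i for i, name in enumerate(sorted(class_dir_names))}


def one_hot(index, num_classes):
    """Return a one-hot label vector, the label format class_mode="categorical" yields."""
    vector = [0.0] * num_classes
    vector[index] = 1.0
    return vector


def rescale_pixels(pixels, scale=1/255.):
    """Multiply 0-255 pixel intensities by scale so they fall in [0, 1]."""
    return [p * scale for p in pixels]


indices = class_indices_from_dirs(["steak", "chicken_curry", "pizza"])
print(indices)                                  # {'chicken_curry': 0, 'pizza': 1, 'steak': 2}
print(one_hot(indices["pizza"], len(indices)))  # [0.0, 1.0, 0.0]
print(rescale_pixels([0, 128, 255]))            # values between 0.0 and 1.0
```

With the real generator, train_data_10_percent.class_indices holds the mapping Keras actually chose.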

Setting up callbacks (things to run whilst our model trains)

Callbacks are extra functionality you can add to your models to be performed during or after training. Some of the most popular callbacks are:

- Tracking experiments with the TensorBoard callback

- Model checkpointing with the ModelCheckpoint callback

- Stopping a model from training (before it trains too long and overfits) with the EarlyStopping callback

Here is what each of them is useful for:

| Callback name | Use case | Code |
|---|---|---|
| TensorBoard | Log the performance of multiple models, then view and compare them visually on TensorBoard (a dashboard for inspecting neural network parameters). Helpful for comparing the results of different models on your data | tf.keras.callbacks.TensorBoard() |
| Model checkpointing | Save your model as it trains so you can stop training if needed and come back later to continue where you left off. Helpful if training takes a long time and can't be done in one sitting | tf.keras.callbacks.ModelCheckpoint() |
| Early stopping | Leave your model training for an arbitrary amount of time and have it stop automatically when it ceases to improve. Helpful when you've got a large dataset and don't know how long training will take | tf.keras.callbacks.EarlyStopping() |
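The idea behind early stopping fits in a few lines of plain Python. The sketch below is a simplified, hypothetical early_stopping_epoch helper that scans a list of validation losses; it is not the Keras implementation, though its patience and min_delta parameters are named after the EarlyStopping arguments of the same name.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the 0-based epoch at which training would stop, or None.

    Simplified sketch of early stopping on "val_loss": stop once the loss has
    not improved by more than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # Improvement: remember the new best and reset the counter
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None  # never ran out of patience


print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.71, 0.75], patience=3))  # 5
```

In an actual fit() call you would instead add something like tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3) to the callbacks list.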

import datetime

import tensorflow as tf

def create_tensorboard_callback(dir_name, experiment_name):
    # Log to dir_name/experiment_name/<timestamp> so every run gets its own folder
    log_dir = dir_name + "/" + experiment_name + "/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
    tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
    print(f"Saving TensorBoard log files to: {log_dir}")
    return tensorboard_callback

Note: You can customize the directory where your TensorBoard logs (model training metrics) get saved to whatever you like. The log_dir parameter we've created above is only one option.
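One such variation: build the path with os.path.join instead of string concatenation, so it uses the right separator on any operating system. The make_log_dir helper below is hypothetical; its optional now parameter is an addition (not in the course code) so the timestamp can be fixed for reproducible paths.

```python
import datetime
import os


def make_log_dir(dir_name, experiment_name, now=None):
    """Build dir_name/experiment_name/YYYYmmdd-HHMMSS using the OS path separator."""
    if now is None:
        now = datetime.datetime.now()  # default: timestamp of the current run
    return os.path.join(dir_name, experiment_name, now.strftime("%Y%m%d-%H%M%S"))


print(make_log_dir("tensorflow_hub", "resnet50V2", datetime.datetime(2022, 1, 31, 9, 5, 7)))
# tensorflow_hub/resnet50V2/20220131-090507 on Linux/macOS
```

The resulting string can be passed as log_dir to tf.keras.callbacks.TensorBoard exactly like the one built above.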

efficientnet_url = "https://tfhub.dev/tensorflow/efficientnet/b0/feature-vector/1"

resnet_url = "https://tfhub.dev/google/imagenet/resnet_v2_50/feature_vector/5"

import tensorflow as tf

import tensorflow_hub as hub

from tensorflow.keras import layers

def create_model(model_url, num_classes=10):
    """
    Takes a TensorFlow Hub URL and creates a Keras Sequential model with it.

    Args:
        model_url (str): A TensorFlow Hub feature extraction URL.
        num_classes (int): Number of output neurons in the output layer,
            should be equal to number of target classes, default 10.

    Returns:
        An uncompiled Keras Sequential model with model_url as feature
        extractor layer and a Dense output layer with num_classes output neurons.
    """
    # Download the pretrained model and wrap it as a Keras layer
    feature_extractor_layer = hub.KerasLayer(model_url,
                                             trainable=False,  # Freeze the already learned parameters
                                             name="feature_extraction_layer",
                                             input_shape=IMAGE_SHAPE + (3,))  # (224, 224, 3)

    # Create our model
    model = tf.keras.Sequential([
        feature_extractor_layer,
        layers.Dense(num_classes, activation="softmax", name="output_layer")
    ])

    return model
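The output layer uses activation="softmax", and the models are compiled with loss="categorical_crossentropy", which compares those probabilities with the one-hot labels produced by class_mode="categorical". For a single example, the loss is the negative log of the probability the model assigns to the true class. A plain-Python sketch of both ideas follows; it is an illustration, not Keras's implementation, which works on batched tensors.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot_label, probs, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities.

    Probabilities are clipped to eps so log(0) can't occur.
    """
    return -sum(y * math.log(max(p, eps)) for y, p in zip(one_hot_label, probs))


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(categorical_crossentropy([1, 0, 0], probs))  # equals -log(probs[0])
```

A confident, correct prediction (probability near 1 for the true class) gives a loss near 0, while spreading probability evenly over two classes gives log(2).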

resnet_model = create_model(resnet_url,

num_classes=train_data_10_percent.num_classes)

resnet_model.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(),

metrics = ["accuracy"])

resnet_model.summary()

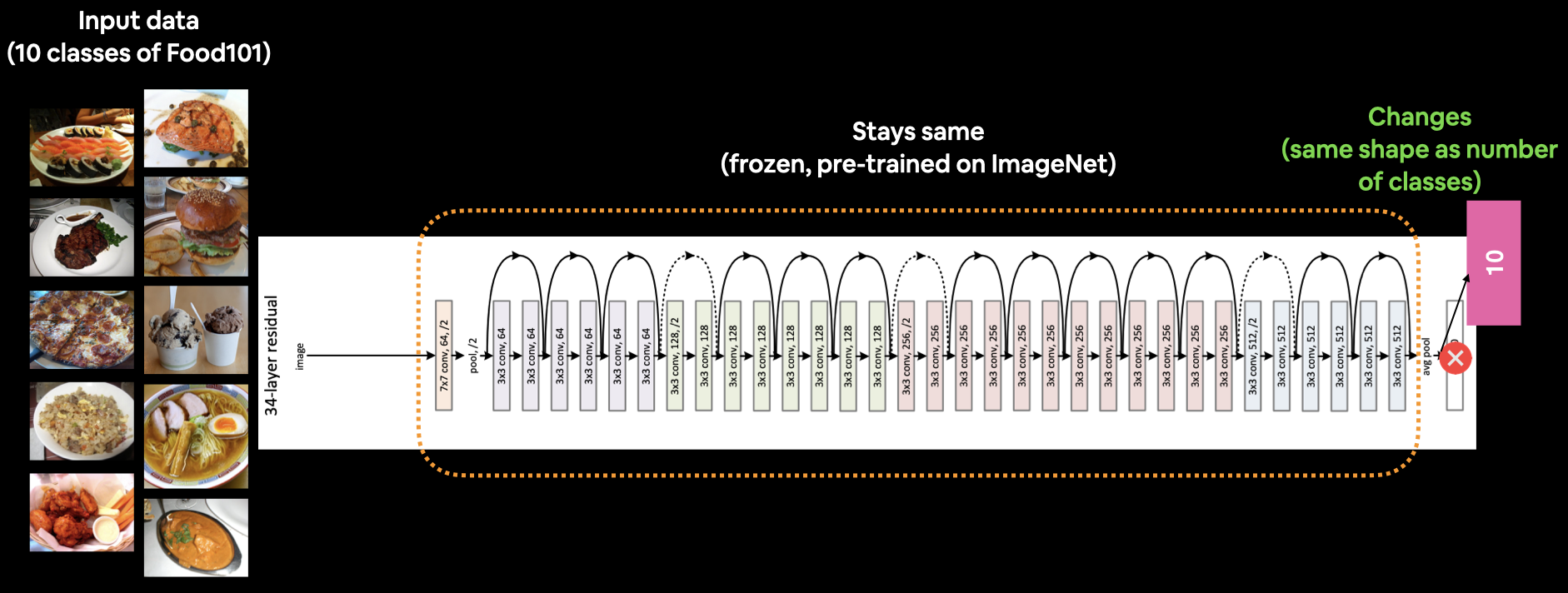

Whoa... the model has ~23 million parameters, but only ~20,000 of them are trainable; the rest belong to the frozen feature extractor we downloaded from TensorFlow Hub. Here's a look at the ResNet50 architecture:

What our current model looks like: a ResNet50V2 backbone with a custom dense layer on top (10 classes instead of 1000 ImageNet classes). Note: The image shows ResNet34 instead of ResNet50. Image source: https://arxiv.org/abs/1512.03385.

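The ~20,000 trainable parameters all come from the new output layer. A Dense layer has one weight per (input, unit) pair plus one bias per unit, and the ResNet50V2 feature vector from TF Hub has 2,048 values, so the arithmetic works out as in this small sketch (dense_param_count is a hypothetical helper):

```python
def dense_param_count(input_dim, units):
    """Parameters in a Dense layer: input_dim * units weights plus units biases."""
    return input_dim * units + units


# 2048-value ResNet50V2 feature vector -> 10 output units (one per food class)
print(dense_param_count(2048, 10))  # 20490
```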

resnet_history = resnet_model.fit(train_data_10_percent,

epochs = 5,

steps_per_epoch =len(train_data_10_percent),

validation_data = test_data,

validation_steps = len(test_data),

callbacks = [create_tensorboard_callback(dir_name="tensorflow_hub",

experiment_name ="resnet50V2")])

Our transfer learning feature extractor model outperformed all of the previous models we built by hand. With only 10% of the training data, it reached ~91% training accuracy and ~77% validation accuracy!

# We could put a function like this into a script called "helper.py" and import it
import matplotlib.pyplot as plt

plt.style.use('dark_background')  # set dark background for plots

def plot_loss_curves(history):
    """
    Plots separate loss and accuracy curves for training and validation metrics.

    Args:
        history: TensorFlow History object.

    Returns:
        None; draws one figure for loss and one for accuracy.
    """
    loss = history.history["loss"]
    val_loss = history.history["val_loss"]
    accuracy = history.history["accuracy"]
    val_accuracy = history.history["val_accuracy"]
    epochs = range(len(history.history["loss"]))

    # Plot loss
    plt.plot(epochs, loss, label="training_loss")
    plt.plot(epochs, val_loss, label="validation_loss")
    plt.title("Loss")
    plt.xlabel("Epochs")
    plt.legend()

    # Plot accuracy
    plt.figure()
    plt.plot(epochs, accuracy, label="training_accuracy")
    plt.plot(epochs, val_accuracy, label="validation_accuracy")
    plt.title("Accuracy")
    plt.xlabel("Epochs")
    plt.legend();

plot_loss_curves(resnet_history)
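plot_loss_curves reads from history.history, which is a plain Python dict mapping each metric name ("loss", "accuracy", "val_loss", "val_accuracy") to a list with one value per epoch. That makes it just as easy to pull out numbers as plots. For example, a hypothetical best_epoch helper to find where validation accuracy peaked:

```python
def best_epoch(history_dict, metric="val_accuracy", mode="max"):
    """Return (epoch_number, value) for the best value of `metric`.

    history_dict is a History.history-style dict: metric name -> per-epoch values.
    epoch_number is 1-based to match the "Epoch 1/5" lines Keras prints during fit().
    """
    values = history_dict[metric]
    best_value = max(values) if mode == "max" else min(values)
    return values.index(best_value) + 1, best_value

# Usage with the run above:
# best_epoch(resnet_history.history)                          # highest val_accuracy
# best_epoch(resnet_history.history, "val_loss", mode="min")  # lowest val_loss
```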

efficientnet_model = create_model(model_url=efficientnet_url,

num_classes=train_data_10_percent.num_classes)

# Compile the EfficientNet model

efficientnet_model.compile(loss = "categorical_crossentropy",

optimizer = tf.keras.optimizers.Adam(),

metrics = ["accuracy"])

# Fit EfficientNet model to 10% of training data

efficientnet_history = efficientnet_model.fit(train_data_10_percent,

epochs =5,

steps_per_epoch =len(train_data_10_percent),

validation_data = test_data,

validation_steps = len(test_data),

callbacks = [create_tensorboard_callback(dir_name ="tensorflow_hub",

experiment_name="efficientnetb0")])

We have done really well with the EfficientNetB0 model: training accuracy of ~91% and validation accuracy of ~84%.

plot_loss_curves(efficientnet_history)

efficientnet_model.summary()

resnet_model.summary()

The EfficientNet architecture performs really well even though it has far fewer parameters than the ResNet model.

len(efficientnet_model.layers[0].weights)

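These are the weights/parameters the pretrained EfficientNetB0 feature extractor learned on ImageNet. Since the layer is frozen (trainable=False), our training doesn't update them; instead, our model reuses the patterns they encode to extract features that generalize to our food images.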

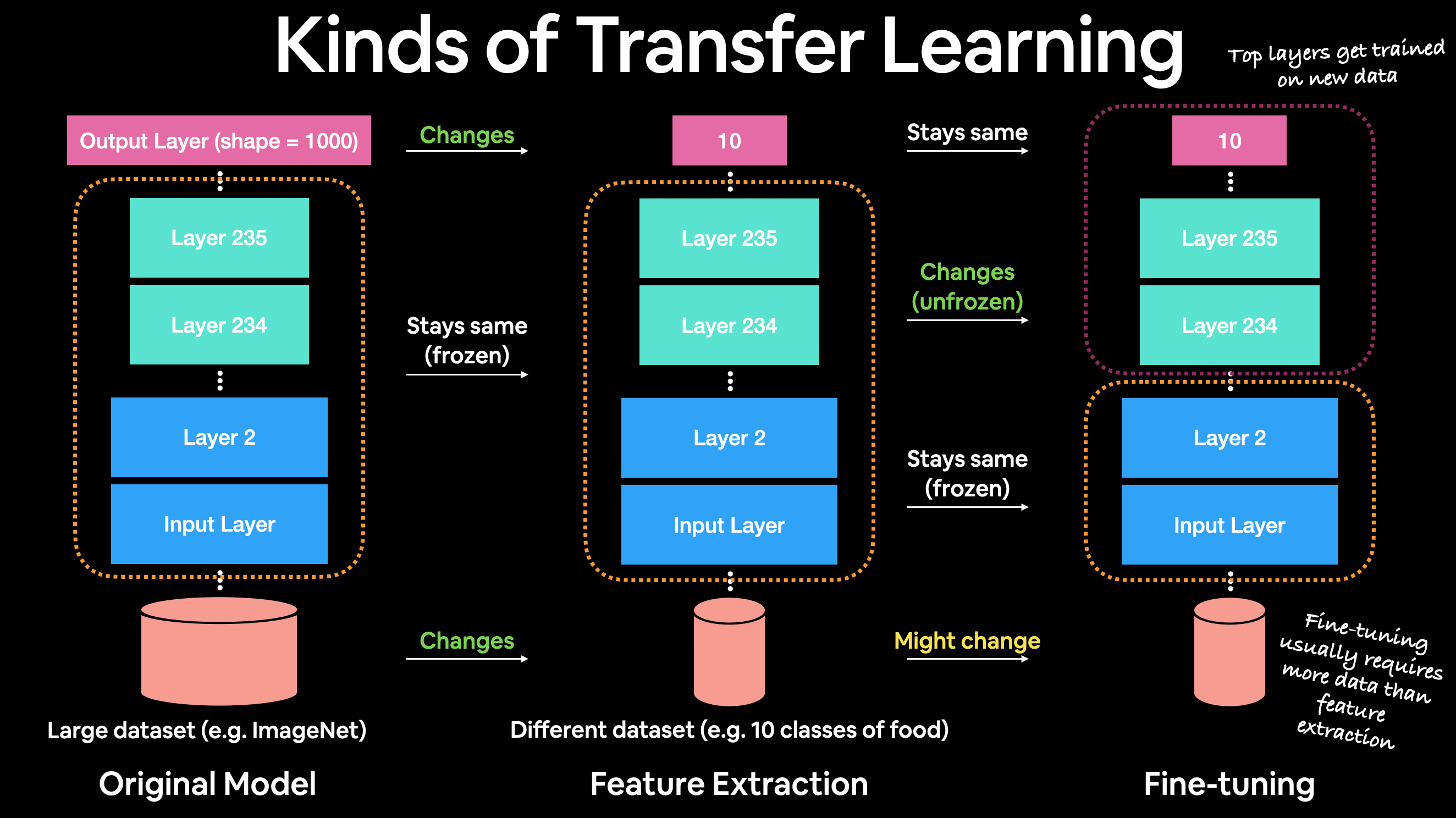

Different types of transfer learning

- "As is" transfer learning - using an existing model with no changes (e.g. using an ImageNet model on the 1000 ImageNet classes, none of your own)

- "Feature extraction" transfer learning - use the pre-learned patterns of an existing model (e.g. EfficientNetB0 trained on ImageNet) and adjust the output layer for your own problem (e.g. 1000 classes -> 10 classes of food)

- "Fine-tuning" transfer learning - use the pre-learned patterns of an existing model and "fine-tune" many or all of the underlying layers (including new output layers)

The different kinds of transfer learning: an original model, a feature extraction model (only the top 2-3 layers change) and a fine-tuning model (many or all of the original model's layers get changed).

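The three approaches differ mainly in which layers are allowed to learn. The toy sketch below makes that concrete with a hypothetical trainable_flags helper that models a backbone as a plain list of layers; in Keras the same choice is made through each layer's trainable attribute (as with trainable=False on the hub.KerasLayer above).

```python
def trainable_flags(num_backbone_layers, mode, num_unfrozen=0):
    """Return (backbone_flags, trains_new_head) for a transfer learning mode.

    backbone_flags[i] is True if backbone layer i (counted from the input) is trained.
    Hypothetical toy model of the three approaches, not a Keras API.
    """
    if mode == "as_is":
        # Reuse the original model unchanged: nothing trains and no new head is added
        return [False] * num_backbone_layers, False
    if mode == "feature_extraction":
        # Freeze the whole backbone; only the new output layer learns
        return [False] * num_backbone_layers, True
    if mode == "fine_tuning":
        # Unfreeze the top `num_unfrozen` backbone layers (closest to the output) plus the new head
        n_frozen = max(num_backbone_layers - num_unfrozen, 0)
        return [False] * n_frozen + [True] * (num_backbone_layers - n_frozen), True
    raise ValueError(f"Unknown mode: {mode}")


print(trainable_flags(4, "feature_extraction"))  # ([False, False, False, False], True)
print(trainable_flags(4, "fine_tuning", 2))      # ([False, False, True, True], True)
```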

Comparing our model's results using TensorBoard

What is TensorBoard?

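- A way to visually explore your machine learning models' performance and internals.

- Host, track and share your machine learning experiments on TensorBoard.dev.

Note: When you upload things to TensorBoard.dev, your experiments are public. So, if you're running private experiments (things you don't want others to see), do not upload them to TensorBoard.dev. Also note that the TensorBoard.dev service has since been shut down, so the upload commands below no longer work; TensorBoard itself can still be run locally on the same log directory.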

# Upload TensorBoard dev records

!tensorboard dev upload --logdir ./tensorflow_hub/ \

--name "EfficientNetB0 vs. ResNet50V2" \

--description "Comparing two different TF Hub Feature extraction model architectures using 10% of the training data" \

--one_shot

!tensorboard dev list

# !tensorboard dev delete --experiment_id [copy your id here]

Weights & Biases also integrates with TensorFlow, so we can use it as a visualization tool too.